Key Takeaways

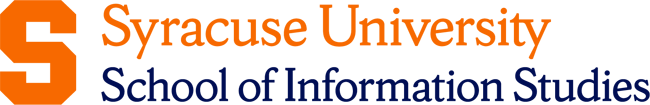

- AI can be categorized into Narrow, General, and Superintelligent AI, reflecting its progression from task-specific functions to advanced human-like intelligence.

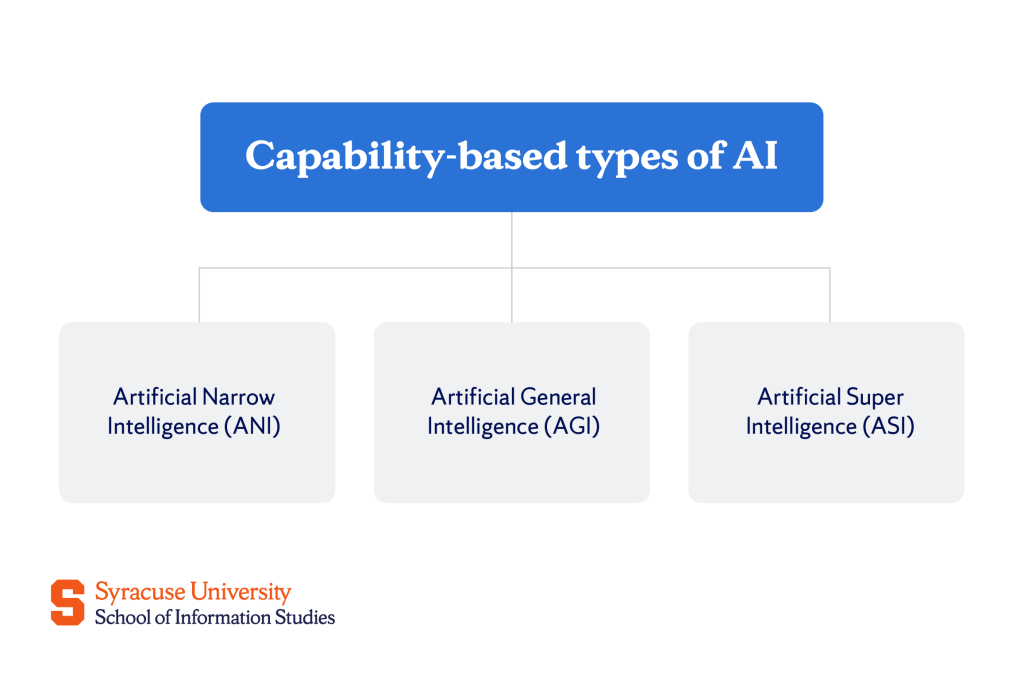

- Based on how it processes information and interacts with its environment, AI is classified as Reactive Machines, Limited Memory AI, Theory of Mind AI, and Self-Aware AI.

- Machine learning, deep learning, natural language processing, and computer vision are AI technologies that enable machines to learn, understand language, and interpret visual information.

As computers began to emerge, the idea of machines that could think like humans started to take shape. In 1956, the Dartmouth Conference marked a significant moment as it brought together researchers from different fields to explore what had often been called “thinking machines.” The proposal for that gathering introduced the term “Artificial Intelligence,” or, as we more often refer to it now, AI.

In 1997, IBM’s Deep Blue supercomputer made headlines by defeating world chess champion Garry Kasparov in a six-game match, showing that a machine could outperform a top human player at a complex, well-defined task. From that point on, AI’s development accelerated, and what once seemed futuristic became increasingly accessible.

Today, there are many different types of AI that play a role in our daily lives—from virtual assistants and recommendation algorithms to self-driving cars and advanced medical diagnostics.

What Are the Different Types of AI?

One of the most remarkable aspects of artificial intelligence is its versatility—it can be applied in countless ways across various scenarios. To fully grasp its capabilities and potential, it’s important to understand the types of AI.

Types of AI based on capability

AI is classified into different types depending on the extent to which the system can replicate human-like intelligence and perform tasks.

Narrow AI (Weak AI)

Weak AI, or Artificial Narrow Intelligence (ANI), refers to systems designed to perform specific tasks or solve particular problems within a defined scope. They cannot think or make decisions outside that scope. Unlike humans, who can apply intelligence across different situations, Narrow AI operates under set constraints without general cognitive abilities.

Some popular examples include virtual assistants like Siri and Alexa, recommendation algorithms used by streaming platforms, and facial recognition systems. While these technologies can mimic human-like intelligence in their specific functions, their capabilities are limited to a single area of expertise.

General AI (Strong AI)

General AI, also known as Strong AI or Artificial General Intelligence (AGI), refers to machines that would be able to think, learn, and apply knowledge across different tasks, just like humans. Unlike Narrow AI, which is limited to specific jobs, General AI would be able to transfer what it learns from one situation to another and adapt to new challenges without needing help from humans.

Currently, this type of AI remains a theoretical concept, with ongoing research striving to achieve this level of versatility and autonomy in machines.

Superintelligent AI

Superintelligent AI, often referred to as Artificial Superintelligence (ASI), represents a level of artificial intelligence that surpasses human intelligence. Unlike General AI, which aims to match human intelligence, Superintelligent AI would be capable of thinking, innovating, and reasoning at a level beyond what humans can achieve.

As with General AI, Superintelligent AI is hypothetical at this stage, and its development raises significant ethical and existential considerations.

Types of AI based on functionality

AI can also be classified based on its functionality—the specific ways it operates and interacts with its environment. The distinctions made are based on how AI processes information, learns from data, and responds to stimuli.

Reactive machines

Reactive machine AI refers to the most basic level of artificial intelligence. These systems respond to specific inputs with predetermined outputs without the ability to store data or learn from past experiences. They are designed to react in real time, making them effective for straightforward tasks.

Because they don’t improve or adapt over time, reactive machines are the simplest form of AI and are often described as the foundation on which more advanced systems build. Developers can access and integrate static machine learning models, a form of reactive AI, through open-source platforms like GitHub.

Examples of reactive machine AI include IBM’s Deep Blue, which could evaluate millions of possible chess positions per second but did not learn from past games. Reactive AI is also commonly cited as powering practical applications like Netflix’s recommendation engine and traffic management systems that use real-time data to alleviate congestion and improve safety.
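To make the idea of predetermined responses concrete, here is a minimal Python sketch of a reactive system. The traffic states, the action names, and the `react` function are hypothetical and chosen only for illustration; real traffic management systems are far more involved. The point is that the same input always yields the same output, and nothing is stored between calls.

```python
# Hypothetical rule table: each observed traffic state maps to one fixed action.
RESPONSES = {
    "light_traffic": "keep_signal_timing",
    "heavy_traffic": "extend_green_phase",
    "accident_reported": "reroute_vehicles",
}


def react(observation: str) -> str:
    """Return the predetermined action for an observation; no state is kept."""
    return RESPONSES.get(observation, "no_action")


if __name__ == "__main__":
    # Seeing the same event twice produces the same response both times.
    for event in ["heavy_traffic", "heavy_traffic", "unknown_event"]:
        print(event, "->", react(event))
```

Because `react` keeps no history, it cannot notice that heavy traffic was reported twice in a row, which is exactly the limitation that separates reactive machines from the next category.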

Limited memory AI

Limited memory AI refers to systems that can store and use past data to improve their predictions and performance over time. It is more advanced than reactive machines because it learns from experience and adjusts its responses based on the patterns it identifies.

While all machine learning models draw on stored data while they are being trained, not all of them continue to learn once they are deployed. Some examples of limited memory AI include self-driving cars, customer service chatbots, smart home devices, and industrial robotics.

Limited memory AI operates through two key methods: continuous training, where a team of developers regularly updates the model with new data, and automated learning, where the AI system is designed to monitor its usage and performance so that it can automatically retrain itself based on feedback and new inputs.
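The sketch below illustrates the basic idea of using a bounded store of past observations to shape the next prediction. It is a deliberately simple stand-in, assuming a moving average as the "model"; the class name `LimitedMemoryPredictor` and the window size are hypothetical, and production systems such as self-driving cars rely on far richer models and retraining pipelines.

```python
from collections import deque


class LimitedMemoryPredictor:
    """Predicts the next value as the mean of the most recent observations."""

    def __init__(self, window: int = 3) -> None:
        # A deque with maxlen drops the oldest item once the window is full.
        self.history = deque(maxlen=window)

    def observe(self, value: float) -> None:
        """Store a new observation, forgetting the oldest if necessary."""
        self.history.append(value)

    def predict(self) -> float:
        """Return the average of the remembered observations (0.0 if none)."""
        if not self.history:
            return 0.0
        return sum(self.history) / len(self.history)


if __name__ == "__main__":
    predictor = LimitedMemoryPredictor(window=3)
    for reading in [10, 20, 30, 40]:
        predictor.observe(reading)
    # Only the last three readings (20, 30, 40) are still remembered.
    print(predictor.predict())
```

Each new observation changes the prediction, which loosely mirrors the automated learning described above, while the fixed window is what makes the memory "limited."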

Theory of mind AI

Theory of Mind AI represents a future stage of artificial intelligence that aims to understand and respond to human thoughts and emotions. Current AI systems operate based on commands and data, whereas Theory of Mind AI would be able to interpret emotional cues and adjust its responses accordingly.

For example, today’s virtual assistants like Alexa or Siri provide straightforward answers without recognizing emotional context. If someone yells at Google Maps out of frustration, it will still deliver the same traffic updates without offering reassurance or support.

However, systems like ChatGPT are beginning to show progress in this regard. Though not capable of genuine emotional intelligence, such AI can simulate empathy by acknowledging compliments or responding sympathetically to expressions of frustration. It hints at future systems that could offer more emotionally aware communication.

How humans perceive and interact with AI companions is an active area of research. Jaime Banks, an Associate Professor at the School of Information Studies, has secured a $600,000 grant from the National Science Foundation (NSF) to explore how language and social cognition shape human perceptions of AI companions. As Banks explains, “We want to understand the subjective experience of seeing an AI companion as someone, and how that experience links to the positive or negative effects.”

Self-aware AI

The concept of self-aware AI currently exists only in science fiction and theoretical discussions. It represents a hypothetical stage of AI where machines would possess consciousness and self-awareness.

With such abilities, AI could revolutionize healthcare, scientific research, and space exploration, among other fields. However, this potential comes with significant concerns because if AI were to develop awareness without aligning with human values, it might act in ways that conflict with human interests.

The idea of self-aware AI continues to fuel debates about ethics and control since it could lead to scenarios where humans would need to negotiate and coexist with machines that have their own goals and perspectives.

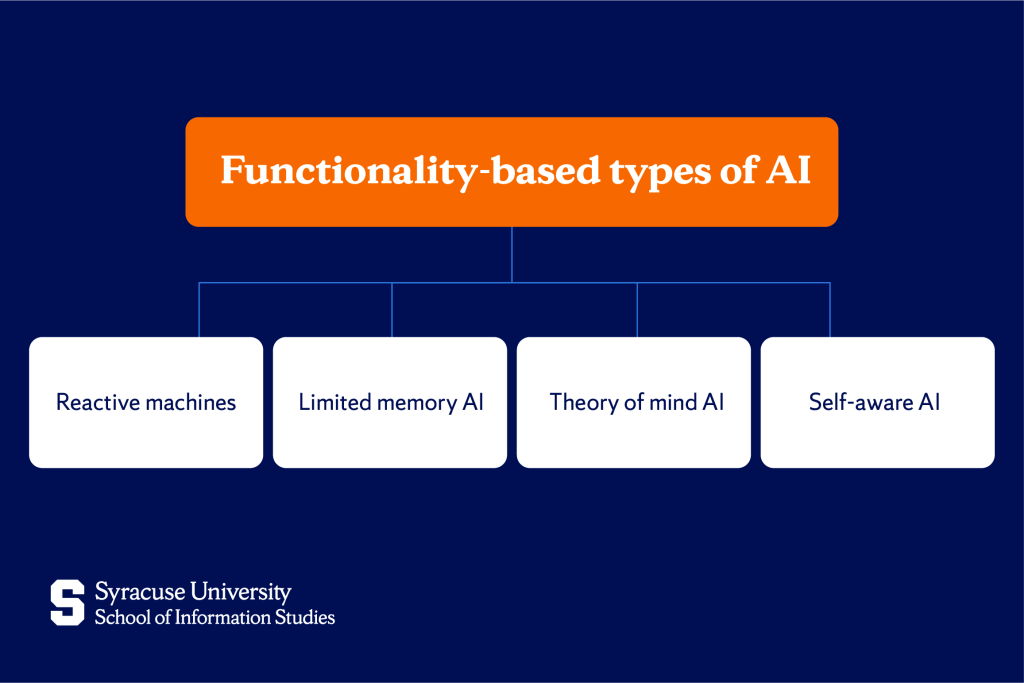

Technology-based classifications of AI

AI encompasses many technologies that make it possible for machines to learn, reason, and make decisions. The four primary ones are:

Machine learning (ML)

Machine learning is a core branch of AI that allows systems to learn from data without being explicitly programmed for each task. By processing data, ML models can recognize patterns, predict outcomes, and improve their accuracy as they are trained on more examples. In contrast to traditional rule-based AI, which follows fixed, hand-written rules, an ML model derives its behavior from the data it is given.
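The contrast between a fixed rule and a learned one can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the "hours studied predicts passing" scenario, the function names, and the tiny dataset are invented, and the learning procedure is a simple threshold search rather than any particular production algorithm.

```python
from typing import List, Tuple


def fixed_rule(hours_studied: float) -> bool:
    """Hand-written rule: the cutoff of 5.0 was chosen by a programmer."""
    return hours_studied >= 5.0


def learn_threshold(samples: List[Tuple[float, bool]]) -> float:
    """Pick the cutoff that classifies the most labeled samples correctly."""
    candidates = sorted(x for x, _ in samples)

    def correct_count(threshold: float) -> int:
        # Count samples whose predicted label matches the true label.
        return sum((x >= threshold) == label for x, label in samples)

    return max(candidates, key=correct_count)


if __name__ == "__main__":
    # Invented training data: (hours studied, passed the exam).
    data = [(1.0, False), (2.0, False), (3.0, True), (4.0, True), (6.0, True)]
    threshold = learn_threshold(data)
    print("learned cutoff:", threshold)
    print("fixed rule says 4h passes:", fixed_rule(4.0))
    print("learned rule says 4h passes:", 4.0 >= threshold)
```

The fixed rule never changes, while the learned cutoff would shift if the data did, which is the core difference the paragraph above describes.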

Deep learning

Deep learning (DL) is a specialized subset of ML built on artificial neural networks with many layers, a design loosely inspired by the structure of the human brain. These networks enable machines to recognize complex patterns and make sophisticated decisions.
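As a rough illustration of how layered artificial neurons combine simple signals into a more complex decision, the following Python sketch wires three neurons into a tiny two-layer network that computes XOR. The weights are hand-picked assumptions for the sake of the example; in actual deep learning they would be learned from data during training, and networks are vastly larger.

```python
from typing import Sequence


def step(x: float) -> int:
    """Threshold activation: output 1 when the weighted input is positive."""
    return 1 if x > 0 else 0


def neuron(inputs: Sequence[float], weights: Sequence[float], bias: float) -> int:
    """Weighted sum of the inputs plus a bias, passed through the activation."""
    return step(sum(i * w for i, w in zip(inputs, weights)) + bias)


def xor_network(x1: int, x2: int) -> int:
    # Hidden layer: hand-picked weights (not learned) that detect OR and AND.
    h_or = neuron([x1, x2], [1.0, 1.0], -0.5)
    h_and = neuron([x1, x2], [1.0, 1.0], -1.5)
    # Output layer: fires when OR is true but AND is not.
    return neuron([h_or, h_and], [1.0, -1.0], -0.5)


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "->", xor_network(a, b))
```

No single neuron of this kind can compute XOR on its own; stacking a hidden layer in front of the output neuron is what makes the pattern recognizable.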

Natural Language Processing (NLP)

Natural language processing is a branch of AI dedicated to enabling machines to understand, interpret, and respond to human language. By combining linguistics with machine learning, NLP allows computers to process text and speech, facilitating communication between humans and machines.

Both machine learning and deep learning are essential to NLP, helping it recognize patterns, make predictions, and comprehend human language more accurately.

Syracuse University doctoral candidate Lizhen Liang is using NLP, among other methods, to research how AI can help detect and reduce inequality in science. Describing his approach, he explains: “Using scientific methods such as natural language processing, statistical analysis, and network science, I strive to understand the intricate relationships between papers, researchers, institutions, and groundbreaking ideas.”

Computer vision

Computer vision enables AI systems to interpret and analyze visual information from the world around them. Using deep learning techniques, computer vision allows machines to identify objects, recognize faces, and understand spatial relationships. By allowing machines to “see” and interpret their surroundings, computer vision expands the range of tasks AI can perform, bringing it closer to human-like perception.

Specialized types of AI and their applications

Artificial intelligence isn’t confined to digital spaces. It has found its way into various industries and everyday experiences. AI’s applications are as diverse as they are impactful.

Robotics and AI

Robotics represents the physical application of AI. Integrating AI into robots enables machines to perform tasks that typically require human effort, perceive their environment, make decisions, and act autonomously or semi-autonomously.

Inspired by movie robots like C-3PO and HAL 9000, iSchool alumnus Keisuke Inoue has built a career in Conversational AI, helping machines communicate using human language. As the director of data science at PandoLogic, an AI-driven recruitment company in New York City, Inoue leads the development of AI chatbots and automation tools that streamline talent acquisition.

Expert systems

Expert systems replicate the decision-making abilities of human specialists. These systems typically combine a knowledge base of facts and rules gathered from experts with an inference engine that applies those rules to analyze complex data, offer insights, and make recommendations.

In healthcare, for example, medical diagnostic tools powered by AI help physicians identify diseases by analyzing patient symptoms and medical histories.
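One classic way to build such a system is a set of if-then rules plus a loop that keeps applying them until nothing new can be concluded, a technique known as forward chaining. The sketch below is a minimal illustration under stated assumptions: the rules, fact names, and `infer` function are hypothetical, carry no medical validity, and do not represent any specific diagnostic product.

```python
from typing import List, Set, Tuple

# Hypothetical rules: (facts that must all be present, fact to conclude).
# These are invented for illustration and are not medical guidance.
RULES: List[Tuple[Set[str], str]] = [
    ({"fever", "cough"}, "possible_flu"),
    ({"possible_flu", "shortness_of_breath"}, "recommend_chest_exam"),
]


def infer(facts: Set[str]) -> Set[str]:
    """Forward chaining: apply rules until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in RULES:
            # A rule fires when all of its conditions are already known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


if __name__ == "__main__":
    symptoms = {"fever", "cough", "shortness_of_breath"}
    print(sorted(infer(symptoms)))
```

Note how the second rule depends on a conclusion drawn by the first; chaining conclusions in this way is what lets a small rule base reach recommendations that no single rule states directly.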

AI in gaming

AI has also transformed gaming by helping create more immersive and adaptive experiences. Through machine learning and behavioral algorithms, non-player characters (NPCs) can respond intelligently to player actions, creating realistic interactions and challenging gameplay.

How AI Will Shape Our Future

The future of AI holds remarkable possibilities. Yet, with this progress, the question arises: Will we shape AI, or will AI shape us?

While both outcomes are possible, the power to guide AI’s development lies in proper education and continuous training. Our Applied Human-Centered Artificial Intelligence Master’s Degree is an excellent option as it equips students with the knowledge and skills to design AI systems that prioritize human well-being and social responsibility.

Combined with opportunities at the Critical AI Research and Education (CAIRE) Lab, which investigates the ethical, social, and cultural impacts of AI, our programs empower future leaders to steer AI development in ways that promote equity, sustainability, innovation, and improvement.

Our faculty members are at the forefront of research in AI futures and data science, exploring how technology reshapes industries and society. The next breakthrough could be yours—join us and make it happen!

Frequently Asked Questions (FAQs)

What are the types of data used in generative AI?

Generative AI works with all kinds of data—text, images, audio, video. It can even use structured data, like numbers and spreadsheets.

Which type of approach describes multiple types of AI working together?

That would be a hybrid AI approach—different AI systems teaming up to improve performance and flexibility.

What types of jobs will AI affect the most?

AI is expected to affect jobs that involve repetitive tasks, data crunching, customer support, and manufacturing—pretty much anything that follows a predictable pattern.

What are the three types of prompting in AI?

The big three are zero-shot prompting, where the AI is given a task with no examples in the prompt; few-shot prompting, which includes a handful of worked examples in the prompt for it to follow; and chain-of-thought prompting, where it is asked to reason step by step before answering.
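The difference between these three styles is easiest to see in the prompt text itself. The Python sketch below only builds the prompt strings; the function names and the "Q:/A:" layout are assumptions chosen for illustration, and no particular AI service or API is called.

```python
from typing import List, Tuple


def zero_shot(task: str) -> str:
    """The task alone, with no examples."""
    return f"Q: {task}\nA:"


def few_shot(task: str, examples: List[Tuple[str, str]]) -> str:
    """A handful of solved examples placed before the real task."""
    shots = "\n".join(f"Q: {q}\nA: {a}" for q, a in examples)
    return f"{shots}\nQ: {task}\nA:"


def chain_of_thought(task: str) -> str:
    """The task plus a cue that asks for step-by-step reasoning."""
    return f"Q: {task}\nA: Let's think step by step."


if __name__ == "__main__":
    question = "What is 12 + 30?"
    print(zero_shot(question), end="\n\n")
    print(few_shot(question, [("What is 1 + 1?", "2")]), end="\n\n")
    print(chain_of_thought(question))
```

In all three cases the underlying model is unchanged; only the text it receives differs.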